Budmonde Duinkharjav

Дуйнхаржавын Будмондэ

budmonde@gmail.com

/in/budmonde

@budmonde.bsky.social

@budmonde

budmonde

... if it exists, it's "budmonde".

Resume

Google Scholar

Last Update: Jun 4, 2025

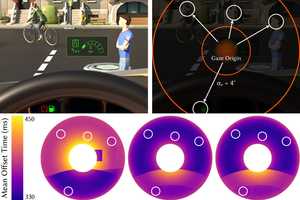

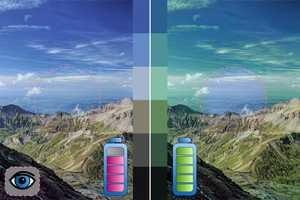

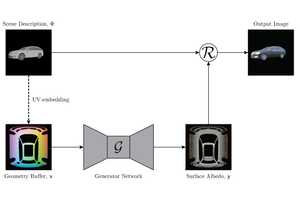

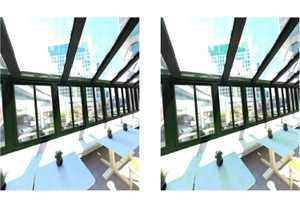

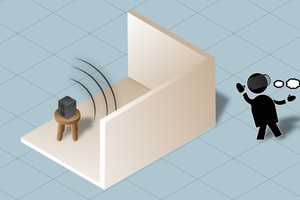

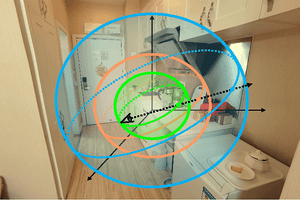

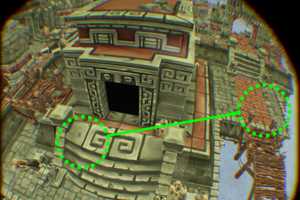

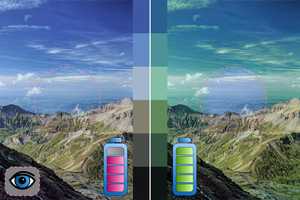

I am a PhD graduate of NYU's Immersive Computing Lab, advised by Prof. Qi Sun. My research studies how what we observe in our surroundings, and how we perceive it, affects our behavior and our ability to perform visual tasks in computer graphics applications. More broadly, I'm interested in how research on human perception can be leveraged to augment and improve computer graphics systems that aid us in our daily lives.

Prior to starting my studies at NYU in Spring 2021, I received my BS and MEng degrees in Computer Science and Engineering from MIT in 2018 and 2019, respectively. During my time at MIT, I was part of the Computer Graphics Group at CSAIL, advised by Prof. Fredo Durand, where I worked on incorporating differentiable ray tracing into machine learning pipelines.

Updates

Publications

Posters and Demos

Teaching Experience

MIT 6.815/865: Digital and Computational Photography: Teaching Assistant, Spring 2019

MIT 6.858: Computer Systems Security: Teaching Assistant, Spring 2018

MIT 6.148: WebLab: Introduction to Web Programming: Co-Instructor

Lecture Videos: Winter 2017 and Winter 2018