Color-Perception-Guided Display Power Reduction for Virtual Reality

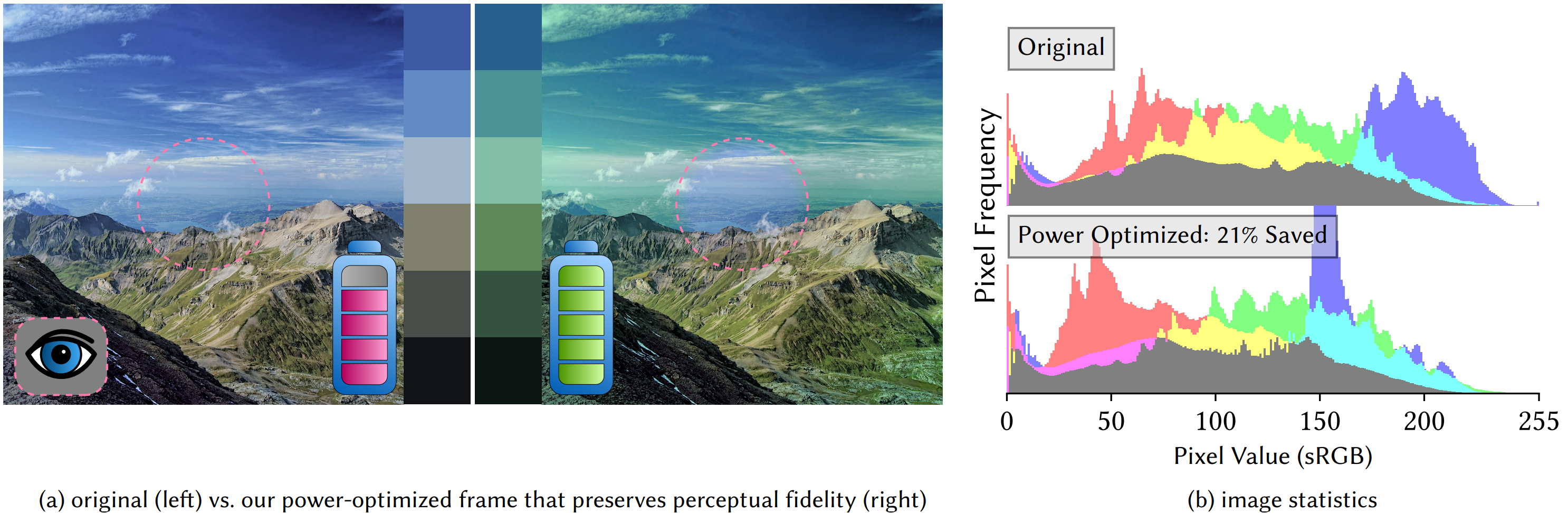

We present a perceptually-guided, real-time, and closed-form model for minimizing the power consumption of untethered VR displays while preserving visual fidelity. We apply a gaze-contingent shader to the original frame ((a) left) to produce a more power-efficient frame ((a) right) while preserving the luminance level and perceptual fidelity during active viewing. The dashed circles indicate the user’s gaze. Our method is jointly motivated by prior literature revealing that: i) the power cost of displaying different colors on LEDs may vary significantly, even if the luminance levels remain unchanged [Dong and Zhong 2011]; ii) human color sensitivity decreases in peripheral [Hansen et al. 2009] and active vision [Cohen et al. 2020]. The color palette of the original frame, modulated using our peripheral filter, is shown in (a) for visual comparison. While the two color palettes appear different when gazed upon directly, an observer cannot discriminate between them when they are shown in the periphery. (b) visualizes how our model shifts the image’s chromatic histograms to minimize the physically-measured power consumption. On our experimental display panel, the blue LEDs consume more energy than the red and green LEDs. Image credit: Tim Caynes © 2012.

Abstract

Battery life is an increasingly urgent challenge for today's untethered VR and AR devices. However, the power efficiency of head-mounted displays is naturally at odds with growing computational requirements driven by higher resolution, refresh rate, and dynamic range, all of which reduce the sustained usage time of untethered AR/VR devices. For instance, the Oculus Quest 2 can sustain only 2 to 3 hours of operation on a full charge. Prior display power reduction techniques mostly target smartphone displays. Directly applying these techniques to AR/VR, however, degrades visual perception with noticeable artifacts. For instance, the "power-saving mode" on smartphones uniformly lowers the pixel luminance across the display; applied directly to VR content, it makes the whole scene appear darker to users.

Our key insight is that VR display power reduction must be cognizant of the gaze-contingent nature of high field-of-view VR displays. To that end, we present a gaze-contingent system that, without degrading luminance, minimizes the display power consumption while preserving high visual fidelity when users actively view immersive video sequences. This is enabled by constructing 1) a gaze-contingent color discrimination model through psychophysical studies, and 2) a display power model (with respect to pixel color) through real-device measurements. Critically, due to the careful design decisions made in constructing the two models, our algorithm is cast as a constrained optimization problem with a closed-form solution, which can be implemented as a real-time, image-space shader. We evaluate our system using a series of psychophysical studies and large-scale analyses on natural images. Experimental results show that our system reduces the display power by as much as 24% (14% on average) with little to no perceptual fidelity degradation.
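To illustrate why such a constrained optimization can admit a closed-form solution, here is a minimal sketch under two assumptions that the abstract does not spell out: that display power is a linear function of the color coordinates, and that the set of colors indistinguishable from the original is an axis-aligned ellipsoid around it. The function name `min_power_color`, the coordinate system, and all numeric values are hypothetical and chosen for illustration only; they are not taken from the paper or its code. Under these assumptions, the lowest-power color inside the ellipsoid lies on its boundary and can be written down directly, with no iterative solver.

```python
import math


def min_power_color(center, semi_axes, power_weights):
    """Minimize sum(w_i * x_i) subject to sum(((x_i - c_i) / a_i) ** 2) <= 1.

    center        -- original color coordinates c_i (hypothetical color space)
    semi_axes     -- positive semi-axis lengths a_i of the assumed
                     discrimination ellipsoid at the current eccentricity
    power_weights -- assumed linear power cost w_i per unit of each coordinate

    Closed form: x_i = c_i - a_i**2 * w_i / sqrt(sum_j (a_j * w_j) ** 2).
    """
    norm = math.sqrt(sum((a * w) ** 2 for a, w in zip(semi_axes, power_weights)))
    if norm == 0.0:
        # Power does not depend on color here, so keep the original color.
        return list(center)
    return [
        c - (a * a * w) / norm
        for c, a, w in zip(center, semi_axes, power_weights)
    ]


def linear_power(color, power_weights):
    """Assumed linear power model: a weighted sum of the color coordinates."""
    return sum(w * x for w, x in zip(power_weights, color))


if __name__ == "__main__":
    # Made-up numbers: two chromatic coordinates, the second costing more power.
    original = [0.40, 0.50]
    shifted = min_power_color(original, semi_axes=[0.03, 0.04],
                              power_weights=[1.0, 2.0])
    print("shifted color:", shifted)
    print("power before:", linear_power(original, [1.0, 2.0]))
    print("power after: ", linear_power(shifted, [1.0, 2.0]))
```

In this sketch the power saved equals the `norm` term, so larger discrimination ellipsoids (for example, at higher eccentricities) or steeper power weights yield larger savings. Because the expression needs only a few multiplications and one square root per pixel, it is the kind of computation that fits in an image-space shader; one way to keep luminance unchanged would be to apply it only to chromatic coordinates.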

Introduction Video

Citation

Budmonde Duinkharjav, Kenneth Chen, Abhishek Tyagi, Jiayi He, Yuhao Zhu, Qi Sun

Color-Perception-Guided Display Power Reduction for Virtual Reality

ACM Transactions on Graphics 41(6) (Proceedings of ACM SIGGRAPH Asia 2022)

BibTeX

Downloads

Paper

Supplementary Material

Code

Data (coming soon)

Acknowledgements

The work was supported, in part, by the National Science Foundation (NSF) under grants #2225861 and #2044963.